How to TroubleShoot Long Running SQL Server Queries

Step 1 - Identify the Long Running Query

So the first issue is how to identify long running queries. SQL Profiler can be a very good way of doing this. Of course a way to identify long running queries is good old fashioned manual detective work - eg getting a copy of the production code and database and running in VS in debug mode and seeing where it is going slow. Prior to this though you will no doubt have received complaints from users about part of a site or application being slow which will point you in the right direction.

In SQL Server 2008, SQL Profiler is a seperate program which is activated from the start menu or from the Tools menu in SQL management studio.

When you first load up SQL Profiler you will then have to create a new trace using File, New Trace. You then select the database you wish to connect to and enter any necessary credentials.

The trace dialog box will then show and this will look like this:

SQL Server Profiler traces may be configured in two ways. Firstly you may specify events that you want to capture and log an entry for. Secondly when an entry is logged you may select which columns of information you want to see.

You configure these options by clicking on the Events Selection tab. The screen will then look like this:

The screen initially shows the most common events and columns you may need but you may also tick the Show all events and Show all columns checkboxes to get the full list to choose from. Warning thought the full list is quite daunting!

Back on the general tab there is a drop down list of templates which you may choose when creating a new trace. A template is just a preselected grouping of events and columns which has been saved with a suitable name which describes its typical purpose. You may or may not find choosing one of these useful so feel free to try a few out and see what information you get. The template called TSQL_Duration for example is good for quickly and easily seeing how long TSQL operations take.

So the Duration column is going to be very important for identifying long running queries. You are able to specify filters on the columns you have chosen so that you can for example only view actions against a particular database or from a particular username. This will probably be needed as you will most likeley have many databases running on one database server and you wil other wise get a lot of noise making it difficult to focus on the events you are interested in.

So if you want to filter by columns to show just a particular database for example then you can do this either when you create the trace or after it has started running. If you are going to modify trace properties after it has started running then it is usually best to stop the trace, modify its properties and then start it again. On the toolbar there are the usual iconic buttons for stop, pause and play as well as the properties button as shown below.

So in order to filter by columns you need to get at the trace properties screen either when you are first creating it or after it has been created via the properties button. From there you need to select the Events Selection tab. On there will be a Column Filters button. Click this and then you can set criteria to filter by. The interface is failry obvious and intuitive for example in the screen shot below the filter is only showing events for a database called KatnipForum. There is also a filter on the application name which has been cleverly set by SQL profiler to not include traffic originating from itself. You can see which columns have filters on by the blue filter icons next to the column names in the list.

So back to the original point which was how to identify long running queries. Well we now have the skills to do this by setting a trace running against just the database we are interested and we can also put a filter on the Duration column. We also need to consider if the query is a stored procedure or if it is dynamic sql as these might not both show up in the trace depending on how we configure the filters. So the first thing is to get a trace running which selects the appropriate types of statements whether they are TSQL or stored procedures. Then as long as we selected the Duration column we can then put a filter on that to only show events which take longer than a certain amount of time.

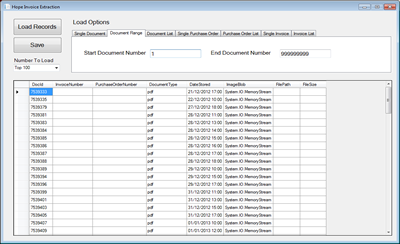

Below is a screenshot of a trace which includes the Duration Column. When you click on a row in the event list, the corresponding SQL is show at the bottom so this makes it very easy to see what is going off.

You can easily see from this screen shot that there is an operation whose duration value is 318. This is not a slow query at all but we can certainly see that it is much slower than most of the others. But what does the 318 mean? Is that 318 miliseconds, nanoseconds, clock cycles or something else?

Well to answer this question I'm a great believer in the think for yourself approach. We could and google this but why not exercise our grey matter?

Ok so I ran a few queries and got a snapshot of a trace as follows:

I then copied and pasted this data into Excel and deleted the columns I wasn't interested in. I really just wanted the Duration, Start Time and End Time. So I pasted these into Excel and formatted the time data to show milliseconds as well as seconds. I then added a fourth column called Time taken and I put a formulae in there so Excel would subtract the start time from the end time giving us the time taken displayed in hours, minutes, seconds and miliseconds. The end result looked like this:

If you then compare the Duration column with the Time taken column we can then start to see there is a pretty obvious match as shown below:

And so we can now clearly see that the Duration column is in miliseconds so when we see 25063 we read this as 25 seconds approx and when we see 1880 we read this as 1.8 seconds approx.

Ok so now we can put an informed value on the filter for our Duration column to identify long running queries. What this value is depends on such things as your application, hardware, number of active users etc but it might be ok to have queries running in less than a second but maybe anything over 3 seconds is bad for example so you would just put a trace on to filter only durations greater than 3000 miliseconds.

Ok so now we have identified our long running query and so onto the next part, how to troubleshoot why it is being slow.

Step 2 - Troubleshoot the Long Running Query

Ok so now we have identified our long running query we can start to look at why it is running slow. You can almost always be sure though that most slow running queries are in some way related to the amount of data. I'm not saying that data is at fault but maybe a problem doesn't manifest itself until larger amounts of data get into the system. Consider this website for example. Each of these articles that I write is actually stored in a SQL Server 2008 database. The content is stored in a column defined with a text data type. I might decide to allow users to search for articles by keyword and to do the search I might use the following kind of statement:

Select ArticleID, Content From PaxiumArticles Where Content Like '%IDisposable%'

Where IDisposable is the keyword that the user searched on. When this website is fairly new I will start off with a few articles which will grow into dozens and eventualy into hundreds and maybe even into thousands. When I have a fairly small number of articles then this search will be very quick. I tried running this query will about 1,500 articles and I was getting a query time of about 6 seconds. I added further test articles so I had about 3000 articles and tried the search again. This time it took 16 seconds. So this is a classic example of how a query does not manifest any slowness until we get a reasonable amount of data in there.

So what is a good process to through to diagnose why the query is slow? Well first get a copy of the database system it is being run against. If this is a production system then you will normally have a copy of that to develop with. It needs to be as close a match as possible to the production system including the data and the amount of data. Once you have this then get a copy of the offending piece of SQL and paste this into Query Analyser.

Next the first thing I do is just run the SQL and confirm how long it is taking. You can see this in Query Analyser in the bottom hand corner as shown below:

If this was a query with multiple where conditions I might put them all on seperate lines and comment them out. I would then run the query and one by one uncomment the where clauses and re run the query until the slow culprit revealed itself.

With the example above though there is only one part to the where clause so we cannot do that. Now the reality here is that experience can tell you what is wrong with many statements and most developers would probably spot what is wrong with the above statement right away just through experience.

What is actualy wrong with it is that in order to execute the sql statement the query engine has to look at every row in the table and see if it contains the word IDisposable and it if does then include it in the query results. If your table has 100 rows then 100 rows need to be examined and if your table contains a million rows then a million rows have to be examined!

An experienced developer might do a count of the rows in the table and see how many are there then they may make a few copies of the table. Lets say the table contains 10,000 records then we could quickly create copies of the table with say 5,000, 2000, 1000, 500 and 100 records in We could then run the query and if we saw quicker and quicker results as the table sizes got smaller then we can confirm our suspicions.

Another way to identify what the query is up to is to display the estimated execution plan. We can do this in Query Analyser by clicking Display Estimated Execution Plan from the Query menu of Query Analyser and for our above example it looks like this:

Now in our simple example this doesn't tell us much that we probably didn't already know but with more complex queries it can give you valuble clues as to what part of your query is holding things up. Also when you are viewing an estimated execution plan, you can hover your mouse over one of the operations and you get more vital information which can help you to solve the problem as follows:

So how do we speed up such a query? Well when we are searching for a phrase within a larger piece of text then normal indexes will not help us. What we need to use is the full text indexing feature of SQL Server. How this works in simple terms is as follows:

SQL Server will have an index of all the words in all the content column of all records in the PaxiumArticles table. This index will be maintained automatically in the background. So we choose to search for the word IDisposable. SQL Server looks this word up in the index table. This is very quick as all the words in there are kept in alphabetical order so a binary chop search algorithm is used which is very fast.

For more information on binary searches see http://en.wikipedia.org/wiki/Binary_search_algorithm

So SQL server has found one ore more rows in our index table with the word IDisposable. It then reads the ArticleID which is also in these rows. It then does a select against the main table where the ArticleID is in the subest it has found. This again does a binary search as the main table has a clustered index by ArticleID as ArticleID is the primary key and so the results will be much much quicker than before.

As an example I applied such a full text index to my PaxiumArticles table and now the query takes around 2 seconds instead of 16 seconds. Whats more if my PaxiumArticles table grows to 6000 records then the original search technique will probably double to around 30 seconds where as the full text index technique might up to maybe 3 seconds at worst. Consider also that my database server is quite an old piece of hardware with not much processing power or memory so on a more modern server these times will be miliseconds rather than seconds.

Instructions on how to setup full text indexes are beyond the scope of this article but the following is a good link:

http://blog.sqlauthority.com/2008/09/05/sql-server-creating-full-text-catalog-and-index/

Do bear in mind though that once you have a full text index setup, you no longer use a Like statement but rather you use a FREETEXT or CONTAINS statement as follows:

Select ArticleID, Content From PaxiumArticles Where Contains(Content, 'IDisposable')

Select ArticleID, Content From PaxiumArticles Where Freetext(Content, 'IDisposable')

In the above example both will actually return the same results. The difference is that Contains does just what it is told whereas Freetext performs some "Magic" - ie it will search conjugations of verbs as well as many other things. Lets say I run the following statements:

Select ArticleID, Content From PaxiumArticles Where Contains(Content, 'roll')

Select ArticleID, Content From PaxiumArticles Where Freetext(Content, 'roll')

In this case the Contains statement returns no results but the Freetext statement does as I have some articles with the word "rolled" in there! Clever stuff. Bear in mind that Freetext is more expensive though so only use it if you need to.

One more gotcha to be aware of is if we ran the following statement:

Select ArticleID, Content From PaxiumArticles Where Contains(Content, 'into')

In this case my database returns no results. This is because SQL Server is configured with a list of noise words - ie words to ignore in searches which would otherwise make the search algorithm much more complicated. These are words like a, is, the etc and unfortunatley the word "into" is one of these so even though I have articles with "Insert into.." in there this will return no results.

Now you might imagine that these noise word would be found in some system table somewhere in SQL Server but in fact they are in a plain text file in the file system. I don't know what the reason was for this, probably a logistical one to do with release schedules rather than a pureley software driven reason. Anyway you can find my list at the following location and yours will be something very simillar depending on how many versions of SQL server you have installed:

Another common scenario where a query can run slow is due to a lack of a normal index.

Consider the following SQL statement:

Select * From Users Where Surname = 'Broening'

I ran this on a table I had created with test data containing about 9 million records. Quite a lot I know but not unheard of in fields like banking, government, social media etc. On my system this took 17 seconds to execute and this is clearly unacceptable. The reason this takes so long is because the table is ordered by its primary key which is UserID as shown below, then a full table scan is needed to find the record as it could be the first record, the middle record, the last record or any other record!

If we paste this SQL statement into Query Analyser and click on Display Estimated Execution plan from the Query menu then we get the following response:

So from here we can see that there is a table scan and that it makes up the bulk of the workload and in fact in this case then Query Analyser is suggesting that we create an index on the Surname.

We can create such an index either with SQL Statements or via the GUI of SQL Server Management Studio. Before we look at this though, note that Query Analyser is suggesting that we create a non clustered index. In SQL Server (And other database systems) we can have clustered and non clustered indexes. A clustered index would be the index which is created when you create a table with a UserID of type int which is auto assigned. The actual table itself is kept in order of UserID so any searches by UserID can seach the table itself as it is kept in the right order. If we create any other indexes then these will be non clustered. Say for example we create an index on Surname then SQL Server will create a table for that index and it will consist of two columns. The first column will be Surname and the second column will be UserID. The table will ke kept in surname order and automatically updated when we add or delete or amend records in the main table. This way if we need to search by Surname then the index table will be searched and this will be very fast as the table is in Surname order. Then once the record is found then the UserID can be read from that column and used to select the main record from the main table again very quickly as the table is stored in UserID order.

In the above screenshot you can right click on the missing index text and click on Missing Index Details. This will show you the suggested SQL statements needed to create the missing index.

In the above case this will look like this:

/*

Missing Index Details from SQLQuery1.sql - Tiffany.Paxium (sa (52))

The Query Processor estimates that implementing the following index could improve the query cost by 99.9344%.

*/

/*

USE [Paxium]

CREATE NONCLUSTERED INDEX [<Name of Missing Index, sysname,>]

ON [dbo].[TestContacts] ([Surname])

*/

This looks more complicated than it is as there is lots of bumf there. The really important part is this:

/*

CREATE NONCLUSTERED INDEX [<Name of Missing Index, sysname,>]

ON [dbo].[TestContacts] ([Surname])

*/Translating this to a real statement would then look like this:

CREATE NONCLUSTERED INDEX [IX_Users_Surname]

ON [dbo].[Users] ([Surname])

The sysname part of the original syntax come from SQL Servers use of templates. To explain this open up SQL Server Management studio and click in the View menu and then click Template Explorer. This may of course already be displayed depending on your configuration. The template explorer is a big collection of ready made SQL code which you can use for comon tasks such as creating a database, doing a backup, creating an index etc. They are grouped into categories as shown below:

If you expand one of the categories such as Index and double click on Create Basic Index you will the following code:

-- =============================================

-- Create index basic template

-- =============================================

USE

<database_name, sysname, AdventureWorks>

CREATE

INDEX <index_name, sysname, ind_test>

ON

<schema_name, sysname, Person>.<table_name, sysname, Address>

(

<column_name1, sysname, PostalCode>

)

In here you will see a number of uses of sysname eg:

To see what this means and how it is used we shall look at a command in the Query menu of Management Studio. Whilst you have the create index template open, click in the Query menu and then select Specify Values for Template Parameters. This will bring up the following dialog box:

By filling in the values in this dialog box, the SQL template code will be replaced with the values you want. The fields which appear in the dialog box are the sysname ones which the code sees when it examines the template. So we can now clearly see that the 3 values for each sysname are used for these three columns to identify the field, it's name and its default value which appears in this dialog box. Its as simple as that but explains why we now saw this sysname stuff in the original SQL code which Management Studio suggested to us to create a new index.

I have used a sensible naming convention here - starting the index name with IX to identify it as an index followed by the table and column name. Some people use naming conventions as follows:

PK_ for primary keys

UK_ for unique keys

IX_ for non clustered non unique indexes

UX_ for unique indexes

Eg <index or key type>_<table name>_<column 1>_<column 2>_<column n>

Ok so back to the code we are going to use to create our index:

CREATE NONCLUSTERED INDEX [IX_Users_Surname]

ON [dbo].[Users] ([Surname])

If we run this statement to create our index and then run our original query statement again (shown below) then we should see dramatic performance improvements. Watch out though, creating the index in the first place may take a while as the whole index table needs to be created. After that though it is maintained as you along - ie when you add, edit or delete a record from the main table then the index table will be updated at the same time to reflect this and this extra update will be very quick. when I created the index on my system it took about 3 minutes so beware it can take that long depending on how many records you have.

Select * From Users Where Surname = 'Broening'

This statement with my new index now runs in a split second so the performance improvement is pretty impressive!

Stuff to here:

- Indexes

- Manually running in Query Analyser and commenting out where clauses then re adding them one by one

http://tonesdotnetblog.wordpress.com/2008/05/26/twelve-tips-for-optimising-sql-server-2005-queries/